Thought partnership with language models

Let's start with a fictional case study, inspired by user research I've been doing on how people interact with language models.

Cynthia is planning her first big solo trip after college: a visit to Australia. It's been on her bucket list since she was a kid, but she knows very little about where to go, what to do, or how to get around. She starts a conversation with a large language model fine-tuned for chat, and they discuss which cities to visit based on her interests and budget.

The model suggests safe parts of town to stay in, and they discuss whether to choose a motel or an Airbnb based on her budget. As the trip approaches and their conversations continue, the model writes her a to-do list of things to buy (chargers, spare battery packs, a basic medical kit, a pillow for the plane, headphones, and adaptors) and gives her tips for long plane journeys. In the end, Cynthia has a hugely successful trip, and thanks to the model's advice she visits areas and towns she never would have thought to seek out.

This is a new sort of interaction with computers, one that's far more personal and flexible than anything in the history of human-computer interaction. It's something I've been calling "thought partnership".

Thought partnership. A relational interaction with a computer system in which the user and computer collaborate to uncover information & insights relevant to the topic of conversation, the user's interests, and their values.

This term has become a handy concept handle for me in the months since I started using it, so I thought it was about time I shared it. Let's unpack it a bit more.

Thought partnership has a few unusual traits:

- It's open-ended. Thought partnership is an exploration: you start it without knowing where it will take you, and at its best it's novel & surprising.

- It's compounding, not transactional. Unlike search, thought partnership involves an ongoing back-and-forth between computer & user (in text or other media) in which ideas and thoughts develop and build on one another.

- It's personal. Good thought partnership requires a contextual understanding of the user (their current state, feelings, values, and requirements) so that the outputs can be tailored to them.

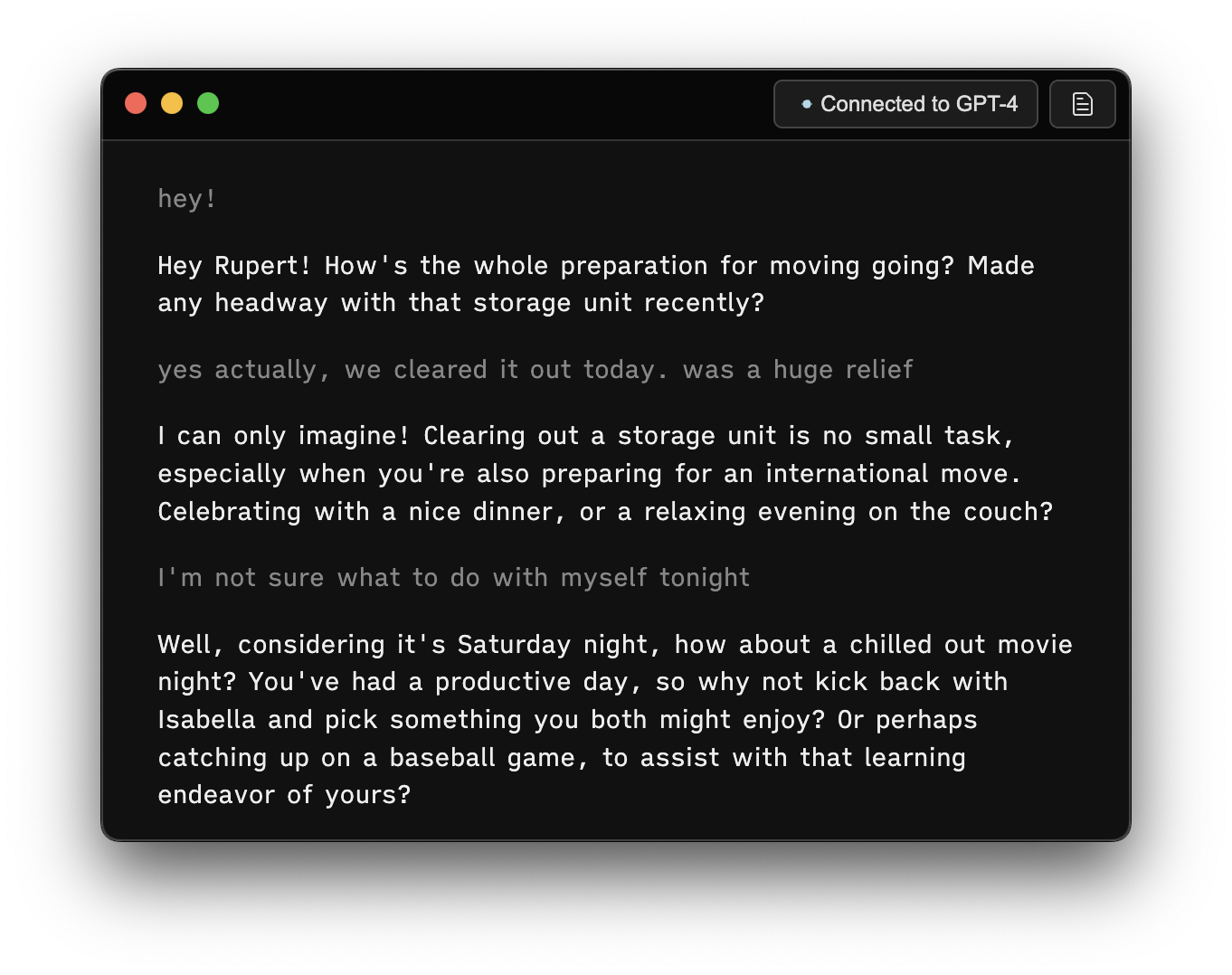

Recently, I've been experimenting with building a tool for thought partnership. For the moment it's quite simple: a language model that has access to a read/write text buffer, which acts as a kind of long-term memory scratchpad.[1]

Using this buffer, the model can preserve details about me over the course of many interactions. I can also inspect what the tool understands about me, and even write directly into the buffer to tell the model about myself or how I want it to behave.
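To make the buffer idea concrete, here is a minimal Python sketch. It is not how Familiar is implemented: the names (`MemoryBuffer`, `build_prompt`, `chat_turn`), the `REMEMBER:` convention the model uses to save a note, and the `fake_model` stand-in for a real model call are all hypothetical. It only illustrates the two properties described above: the whole buffer is prepended to every prompt, and both the model and the user can write to it.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical convention: the model saves a note by emitting a line that
# starts with this prefix. A real system might use tool calls instead.
REMEMBER_PREFIX = "REMEMBER:"


@dataclass
class MemoryBuffer:
    """A plain-text scratchpad that persists across conversations."""
    notes: list[str] = field(default_factory=list)

    def read(self) -> str:
        return "\n".join(self.notes)

    def write(self, note: str) -> None:
        # Skip empty and duplicate notes so the buffer stays compact.
        note = note.strip()
        if note and note not in self.notes:
            self.notes.append(note)


def build_prompt(buffer: MemoryBuffer, user_message: str) -> str:
    # The whole buffer is prepended to every prompt, whatever the topic.
    memory = buffer.read() or "(nothing yet)"
    return (f"What you remember about the user:\n{memory}\n\n"
            f"User: {user_message}\nAssistant:")


def chat_turn(buffer: MemoryBuffer, user_message: str,
              model: Callable[[str], str]) -> str:
    """Call the model, store any notes it emits, and return the visible reply."""
    raw = model(build_prompt(buffer, user_message))
    reply_lines = []
    for line in raw.splitlines():
        if line.startswith(REMEMBER_PREFIX):
            buffer.write(line[len(REMEMBER_PREFIX):])
        else:
            reply_lines.append(line)
    return "\n".join(reply_lines).strip()


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real language model call.
    if "flying home" in prompt:
        return ("Safe travels!\n"
                "REMEMBER: User is flying home to Sydney and may be jet-lagged.")
    return "Noted."


buffer = MemoryBuffer()
buffer.write("Prefers vegetarian food.")  # the user can write to the buffer directly
print(chat_turn(buffer, "I'm flying home tomorrow.", fake_model))  # prints "Safe travels!"
print(buffer.read())
```

A real tool might instead let the model edit the buffer through tool calls, and would persist it to disk or a database rather than keeping it in memory.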

Even this simple combination is already having some novel effects. Since I started using the tool, it has:

- Recommended a restaurant to me while I was travelling in San Francisco, based on its understanding of my taste & values. It also suggested a specific meal to try, which turned out to be on the menu (it was delicious).

- Encouraged me to go to sleep at a particular time on my return to Sydney, as it recalled that I would be jet-lagged.

- Checked in to ask how the writing of this blog post was going, since I'd mentioned it a few days earlier.

Of course, the engine powering all this is a language model. But better tools for thought partnership aren't just about better models. The best way to improve this interaction is to build more thoughtful connective tissue: layers of software that can read from, write to, and search stores of information to gain deeper context, as well as orchestration layers that can trigger API calls and tool use. In my view, the context layer is still starved of good innovation.
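Connective tissue of this kind can take many forms. As one hedged illustration, the sketch below pairs always-on notes (like the buffer) with search over past messages, the combination suggested in footnote [1]. Everything here is hypothetical: `embed` is a toy word-count stand-in for a learned embedding model, and `MessageStore` and `assemble_context` are illustrative names, not a real library's API.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. A real system would use a
    learned embedding model; this stand-in keeps the sketch dependency-free."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


class MessageStore:
    """Stores past messages and retrieves those most similar to a query."""

    def __init__(self) -> None:
        self.messages: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.messages.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        scored = [(cosine(q, vec), text) for text, vec in self.messages]
        scored = [(s, t) for s, t in scored if s > 0]  # drop unrelated messages
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:k]]


def assemble_context(buffer_notes: list[str], store: MessageStore,
                     user_message: str, k: int = 2) -> str:
    """Combine standing notes (shown every turn) with messages retrieved for this turn."""
    notes = "\n".join(f"- {n}" for n in buffer_notes)
    recalled = "\n".join(f"- {m}" for m in store.search(user_message, k))
    return (f"Standing notes about the user:\n{notes}\n\n"
            f"Possibly relevant past messages:\n{recalled}\n\n"
            f"User: {user_message}")


store = MessageStore()
store.add("I'm writing a blog post about thought partnership.")
store.add("Looking for a vegetarian restaurant in San Francisco.")
store.add("My flight back to Sydney lands at 6am.")
print(store.search("How is the blog post going?", k=1))
print(assemble_context(["Prefers vegetarian food."], store,
                       "Any restaurant ideas in San Francisco?"))
```

Keeping the standing notes separate from the retrieved messages mirrors the trade-off between the two methods: the notes appear on every turn, while past messages appear only when they resemble the latest one.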

The tool I made is called Familiar; if you want to play around with it, it's available on mobile, Mac, and the web.

[1] Another common method for retaining memory is vector search over message history. The buffer has some advantages over that approach: it holds summarised insights that can be prepended to every prompt, rather than only the past messages that relate to the latest one. These insights can also evolve over time as the model revises them. Pairing the two methods would be even more powerful.